Computex 2024 - AMD Keynote with Jabs at NVIDIA

Author: Dennis Garcia

Introduction

When I first started attending trade shows I was 100% focused on establishing new contacts and checking out the products being presented. It wasn’t until some industry friends invited me to attend a CES Keynote that my outlook changed and I came to appreciate the importance of these presentations.

As you can imagine, the Keynote speech is geared towards promoting the company the speaker represents, but it also extends beyond that to include current trends in the marketplace, which ultimately sets the stage for the overall theme of the trade show.

This is what happened during that CES presentation, where the speaker established the notion of “The Internet of Things (IOT)”, which has since become a standard term in the industry.

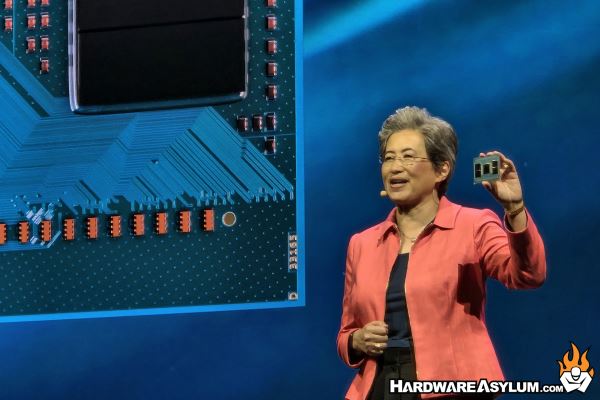

During the opening remarks of the Keynote a historical map of Computex was shown, which spoke to how the focus of the event has changed since it was created. For those unfamiliar, Computex was created when Taiwan was a manufacturing powerhouse looking for a way to attract international buyers and make more sales. At the time Computex was PC focused, and in many ways that has not changed.

When I started attending Computex it was the dawn of Cloud computing, which was followed by IOT and now Connecting AI.

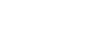

Dr. Lisa Su gave the opening keynote and delivered an excellent overview of how AMD solutions are shaping the AI revolution and enhancing desktop PC and datacenter solutions.

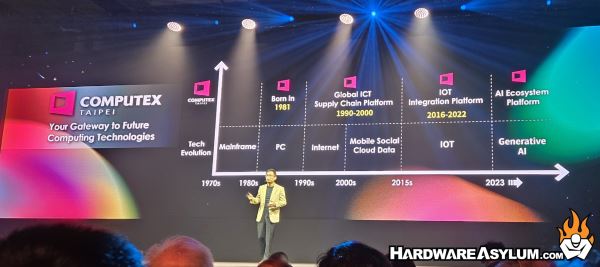

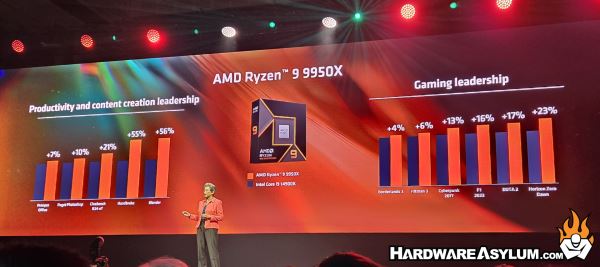

I was really impressed to see that the presentation started with the latest Zen 5 based Ryzen CPU, marking it as the fastest desktop CPU, with availability sometime in July 2024. There was also a not-so-subtle jab at Intel when talking about socket longevity: there are 148 CPUs available for the AM4 socket and currently 38 CPUs available for AM5, with no projected socket change until after 2027.

The discussion then shifted to AI and Datacenter solutions, including the monster Epyc processor and the AMD Instinct MI300X AI processor.

This is where the focus changed from Intel to NVIDIA, along with blatant jabs at everything NVIDIA has been doing.

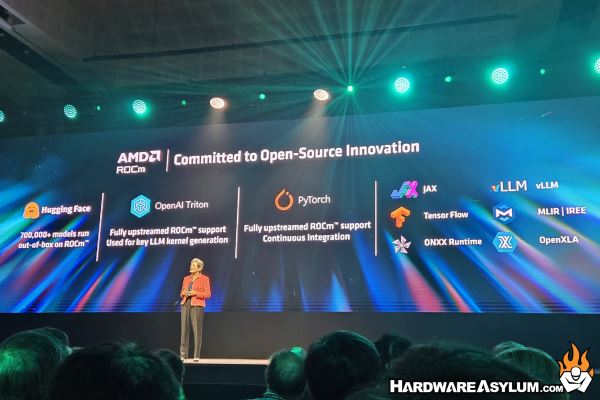

One of the common themes was that the AMD solutions are designed around an open framework using open source software, which AMD says not only allows developers to quickly recompile their work for ROCm on the MI300X but also lets it run faster than on the current H100.

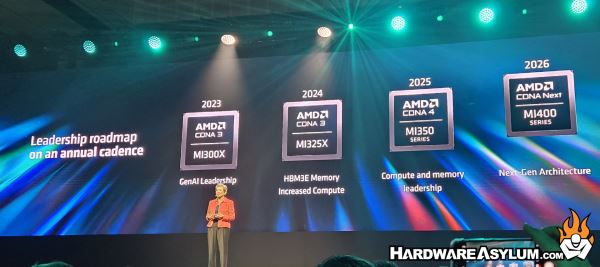

It is also interesting that AMD has chosen an annual cadence for its AI processor releases, likely to mirror NVIDIA but also to ensure it can react to the ever-changing world of AI and AI tool development.

Historically speaking, open platforms have always been the preferred solution, as they allow more companies to implement solutions without paying licensing fees or using proprietary software. Most of the time the open solution is less efficient; however, standardization and lower costs are huge drivers when it comes to widespread adoption. This may not matter in the Generative AI space, or it could be the only thing that does matter.